Can NVIDIA Maintain an Edge in the Inference-First Era? Next Gen AI & GPU Leadership (2025-2030)

Many think that inference-focused companies like Cerebras and Groq, along with custom chips such as Google’s TPUs, will dethrone NVIDIA as the top dog...

As the AI revolution accelerates, a fundamental shift is underway—from the days when training massive models was the primary focus to an era where real‑time, inference‑driven “reasoning” models dominate.

Next‑generation systems, such as OpenAI’s O3 and DeepSeek’s R1‑Zero, are pushing the boundaries of what’s possible by integrating longer sequence lengths, search‑based chain‑of‑thought (CoT) methods, and extensive memory for key‑value caches.

These advances aren’t just incremental improvements—they multiply the compute demands during inference, creating an insatiable appetite for high‑memory, high‑bandwidth GPU clusters.

Amid this transformation, NVIDIA remains at the center of the AI hardware ecosystem. Through rapid strategic pivots—exemplified by its swift transition from the GB200 to the more advanced GB300—the company is addressing the emerging needs of complex inference workloads head‑on.

With enhanced memory capacity, increased FLOPs, and rack-scale NVLink systems like the NVL72, NVIDIA’s platforms are uniquely positioned to handle the intricate requirements of these new reasoning models.

While niche competitors and custom chip initiatives (from Groq, Cerebras, and cloud providers’ TPUs to emerging ASICs) capture parts of the market, the robust, integrated CUDA ecosystem, plug‑and‑play solutions, and proven reliability of NVIDIA’s hardware continue to make it the default choice for a vast majority of AI labs and enterprises.

NVIDIA’s agile strategy positions it to maintain (possibly even expand) its lead over competitors from 2025 to 2030, as the risks of completely weaning off NVIDIA and its ecosystem may outweigh the gains from alternative solutions (at least in the near term).

RELATED: NVIDIA GPUs vs. Custom Chips: Who Wins the War?

The Inference-First Landscape in AI (2025–2030)

Traditionally, AI labs focused their efforts on scaling up training (building larger models, feeding them massive datasets, and pouring in enormous compute to improve performance).

This approach relied on static pretraining: once the model was trained, its knowledge was basically “frozen” and additional improvements were contingent upon increasing model size and training duration.

Over time, this method (pure LLM scaling, i.e., scaling up pretraining) showed diminishing returns for several reasons: saturation of learnable information, rising costs, efficiency limits, etc. Beyond a certain point, adding more parameters and synthetic data doesn’t do much, so it’s no longer cost-effective to continue.
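To make “diminishing returns” concrete, here is a minimal sketch assuming a Chinchilla-style power law, loss(N) = E + A / N^α, with the dataset held fixed and ample. The constants `E`, `A`, and `ALPHA` are hypothetical placeholders chosen to show the shape of the curve, not fitted values for any real model.

```python
# Hypothetical constants for a Chinchilla-style power law (data held fixed
# and ample). Chosen only to illustrate the curve's shape.
E = 1.7       # irreducible loss (hypothetical)
A = 400.0     # scale coefficient (hypothetical)
ALPHA = 0.34  # parameter-scaling exponent (hypothetical)


def loss(n_params: float) -> float:
    """Predicted pretraining loss for a model with n_params parameters."""
    return E + A / n_params**ALPHA


def gain_from_doubling(n_params: float) -> float:
    """Loss reduction bought by doubling the parameter count."""
    return loss(n_params) - loss(2 * n_params)


for n in (1e9, 1e10, 1e11, 1e12):
    print(f"{n:.0e} params: loss {loss(n):.3f}, "
          f"gain from doubling {gain_from_doubling(n):.4f}")
```

Each doubling costs roughly twice as much compute as the last, yet under this curve it buys a smaller loss reduction, which is the economic wall the paragraph above describes.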

Now nearly all cutting-edge labs have pivoted toward scaling “inference” (test-time compute). Instead of relying solely on massive training runs, inference scaling uses techniques such as chain-of-thought reasoning, search-based methods, and multi-path sampling to dynamically refine a model’s output at test time.

Labs are rapidly scaling inference and don’t see any end in sight… an inference wall will eventually come, but probably not anytime soon.

RELATED: Top 7 HPC Stocks for AI Inference Growth (2025-2030)

Novel “Reasoning” Models (e.g., O3 from OpenAI, R1-Zero from DeepSeek)

Beyond simple next-token prediction: Next-generation systems like O3 and R1-Zero integrate longer sequence lengths, search-based CoT (chain-of-thought) methods, and extensive memory for key-value caches. These models no longer answer in a single pass; they engage in multi-step reasoning or parallel CoT expansions, which multiplies compute needs at test time.

Greater inference compute: Each user query can involve iterative reasoning steps, significantly increasing total FLOPs required relative to simpler LLM inference. Long context windows (50k+ tokens) further inflate memory and bandwidth demands.
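A rough way to see the multiplier is the common rule of thumb that a transformer forward pass costs about 2 × N FLOPs per token, where N is the number of active parameters. The sketch below assumes a hypothetical 37B-active-parameter model and hypothetical token counts. It ignores attention cost, which grows with context length, so long-context figures are underestimates.

```python
def query_flops(active_params: float, prompt_tokens: int,
                output_tokens: int, samples: int = 1) -> float:
    """Approximate FLOPs for one query using the ~2*N FLOPs-per-token rule.

    The prompt is processed once (prefill); each of `samples` independent
    reasoning paths then generates `output_tokens` tokens (decode).
    Attention FLOPs are ignored.
    """
    per_token = 2 * active_params
    prefill = per_token * prompt_tokens
    decode = per_token * output_tokens * samples
    return prefill + decode


# Hypothetical model size and token counts, for illustration only.
N = 37e9
direct = query_flops(N, prompt_tokens=1_000, output_tokens=500)
long_cot = query_flops(N, prompt_tokens=1_000, output_tokens=10_000)
multi_path = query_flops(N, prompt_tokens=1_000, output_tokens=10_000, samples=8)

print(f"long CoT / direct:   {long_cot / direct:.1f}x")    # 7.3x
print(f"8-path CoT / direct: {multi_path / direct:.1f}x")  # 54.0x
```

Under these assumptions, a 10k-token chain of thought costs about 7x a short direct answer, and eight parallel paths push that past 50x, before counting the extra attention and KV-cache traffic of long contexts.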

Shifting Economics

“Buy” reliability with more test-time resources: Models like O3 demonstrate that deeper reasoning or multi-path sampling can yield more accurate, trustworthy outputs. However, this also burns more GPU cycles and memory at inference time.
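One simple model of “buying” reliability is majority voting over several sampled answers (self-consistency). The sketch assumes each sample is independently correct with probability p and that all wrong answers agree, a pessimistic simplification; real reasoning models do not produce independent samples, so treat the numbers as illustrative only.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """P(strict majority of n samples is correct) under a simple binomial model.

    Assumes each sample is correct independently with probability p and that
    all wrong samples agree (pessimistic). Use odd n to avoid ties.
    """
    need = n // 2 + 1  # smallest strict majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(need, n + 1))


for n in (1, 5, 15, 45):
    print(f"n={n:>2}: accuracy {majority_vote_accuracy(0.6, n):.3f}, "
          f"relative compute {n}x")
```

Under this model, a per-sample accuracy of 0.6 rises to about 0.68 with five votes while compute grows linearly with n. The same formula shows the catch: if p < 0.5, adding votes makes the majority answer worse.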

Budgets move from training to inference: As advanced AI is productized (chatbots, enterprise assistants, generative content), success hinges on real-time speed and reliability. Consequently, companies now invest more heavily in inference infrastructure.

Inference as (re)training: Systems such as R1-Zero are trained on verifiable tasks (e.g., math or coding problems whose answers can be checked automatically), so the model’s own sampled outputs become training signal, effectively self-improving. By verifying outputs on the fly, they produce new training data. The more these systems are used, the more compute they demand, creating a positive feedback loop of inference usage.
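The “verify, then keep” loop can be sketched with a toy task whose answers are checkable by a rule. Everything here is hypothetical: `noisy_model` stands in for a real model, and addition problems stand in for verifiable math or coding tasks. Note that every generated sample costs inference compute, whether or not it survives verification.

```python
import random
from dataclasses import dataclass


@dataclass
class Example:
    prompt: str
    answer: int


def noisy_model(a: int, b: int, rng: random.Random) -> int:
    """Hypothetical stand-in for a model: correct about 70% of the time."""
    if rng.random() < 0.7:
        return a + b
    return a + b + rng.choice([-2, -1, 1, 2])


def verify(a: int, b: int, answer: int) -> bool:
    """Rule-based check: the true answer is computable, so no human label is needed."""
    return answer == a + b


def harvest(num_prompts: int, samples_per_prompt: int, seed: int = 0):
    """Sample several answers per prompt and keep only verified ones.

    Returns (kept_examples, total_samples_generated).
    """
    rng = random.Random(seed)
    kept: list[Example] = []
    generated = 0
    for _ in range(num_prompts):
        a, b = rng.randint(0, 99), rng.randint(0, 99)
        for _ in range(samples_per_prompt):
            generated += 1
            answer = noisy_model(a, b, rng)
            if verify(a, b, answer):
                kept.append(Example(prompt=f"{a} + {b} = ?", answer=answer))
    return kept, generated


kept, generated = harvest(num_prompts=100, samples_per_prompt=4)
print(f"generated {generated} samples, kept {len(kept)} verified examples")
```

The ratio of generated to kept samples is the hidden inference bill: harder tasks with lower pass rates need more samples per usable training example.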

R1-Zero’s Significance

No human fine-tuning necessary: While earlier “reasoning” systems (e.g., O1, O3) are widely believed to rely on supervised fine-tuning (SFT) for improved chain-of-thought, R1-Zero achieves strong reasoning in verifiable domains such as math and coding purely through reinforcement learning on the base model, with no SFT stage.

Massive scaling potential: Removing humans from the loop lets labs rapidly expand domain coverage. However, each iteration still consumes large inference cycles—amplifying overall demand for hardware.

Machine-driven data creation: R1-Zero’s approach is a prototype for the future: as systems generate their own high-quality data, that data triggers more iterative training or fine-tuning, which again supercharges inference requirements.
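DeepSeek trained R1-Zero with Group Relative Policy Optimization (GRPO) and rule-based rewards. Below is a minimal sketch of only GRPO’s advantage step, assuming binary correctness rewards and using the population standard deviation; the policy-gradient update, ratio clipping, and KL penalty are omitted. Each prompt needs a whole group of G sampled outputs, which is where the inference cycles go.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """GRPO-style advantages: normalize each reward within its own group.

    A_i = (r_i - mean(r)) / std(r) over the G outputs sampled for one prompt.
    The group mean acts as the baseline, so no learned critic is required.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; eps guards the all-equal case
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rule-based rewards for G = 8 sampled answers to one math prompt:
# 1.0 if the final answer matches the reference, else 0.0.
rewards = [1.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0]
print([round(a, 2) for a in group_relative_advantages(rewards)])
```

Correct answers get positive advantages and wrong ones negative, with no human labels involved; a group where every sample scores the same carries no learning signal, so labs must sample enough outputs per prompt to get a mix.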

Implication: All these factors drive a huge appetite for high-memory, high-bandwidth, and advanced parallel GPU clusters. This is exactly where NVIDIA has long been investing, giving it a significant lead.

RELATED: DeepSeek Served as an “NVIDIA Killer” Psyop

NVIDIA’s Strategic Shifts (2024-2025): Blackwell GB200 → GB300 (Mid-Year Upgrades)

Inference Roadmap Pivots

Accelerated memory and interconnect upgrades: NVIDIA saw that advanced inference (reasoning, huge context windows) would place heavier demands on memory capacity and throughput than prior-generation LLM inference.

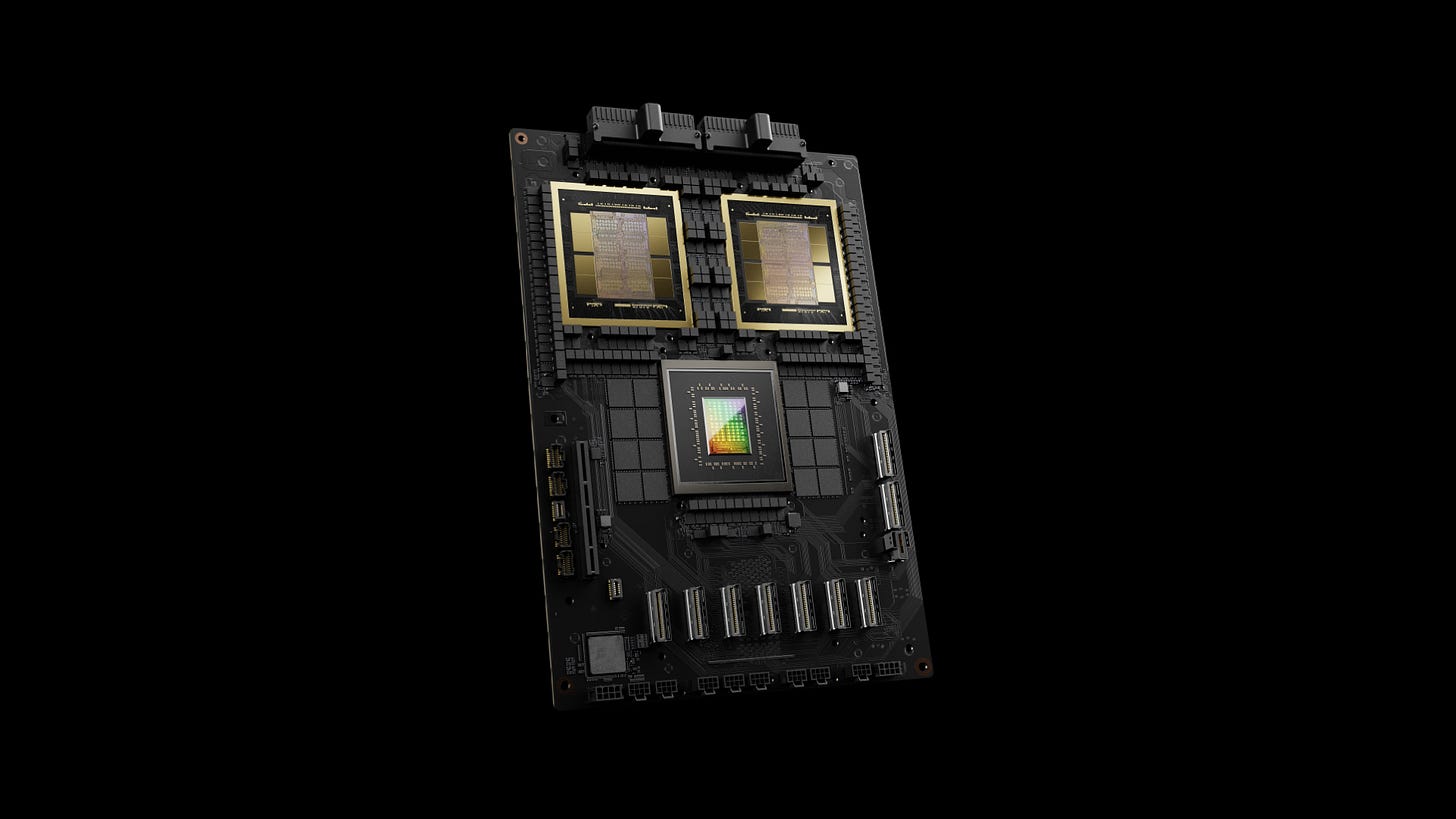

GB200 to GB300: In just 6 months, NVIDIA introduced mid-cycle updates (a newly taped-out B300 compute die on TSMC 4NP, taller 12-Hi HBM3E stacks, and improved networking). This responsiveness underscores NVIDIA’s capacity to rapidly adapt to evolving AI workloads.

Supply Chain Overhaul

From “Bianca” boards to “SXM Puck”: Instead of providing an entire integrated board (as with GB200’s Bianca reference), NVIDIA’s GB300 design is modular. Hyperscalers like Amazon, Google, or Microsoft can customize board-level components (cooling, NICs, VRMs) while NVIDIA remains the core GPU supplier.

Benefiting both sides: Labs get flexibility in networking or custom CPU attachments; NVIDIA still sells the core compute modules. This preserves NVIDIA’s margins and leadership while shifting some manufacturing complexity to partners.

Takeaway: NVIDIA identified the shift to high-powered inference quickly enough to preempt losing ground. By pivoting to more memory- and interconnect-focused designs, they stay ahead of specialized rivals.

GB300’s Key Enhancements

Memory Capacity

12-Hi HBM3E for ~288 GB per GPU: Critical for extremely large context windows (e.g., O3, R1, or MoE-based reasoning) and storing big KV caches.

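Higher throughput per GPU: Memory bandwidth stays at roughly 8 TB/s per GPU, but the extra capacity holds more KV cache, allowing larger batches and more concurrent users. That improves “tokenomics” for large-batch or multi-user inference and helps keep up with advanced CoT expansions.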
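Why capacity matters for reasoning workloads is easiest to see from the KV-cache size: per token it is 2 (key and value) × layers × KV heads × head dimension × bytes per element. The sketch assumes a hypothetical 70B-class model with grouped-query attention (80 layers, 8 KV heads, head dimension 128), an FP16 KV cache, and roughly 70 GB of FP8 weights, and it ignores activations and framework overhead. It compares 192 GB (eight 8-Hi stacks of 24 GB) with 288 GB (eight 12-Hi stacks of 36 GB).

```python
def kv_cache_bytes_per_token(layers: int, kv_heads: int, head_dim: int,
                             bytes_per_elem: int) -> int:
    """KV-cache bytes for one token: a key and a value vector per KV head per layer."""
    return 2 * layers * kv_heads * head_dim * bytes_per_elem


def max_concurrent_sequences(hbm_gb: float, weights_gb: float, seq_len: int,
                             per_token_bytes: int) -> int:
    """How many full-length sequences fit in the HBM left over after the weights."""
    free_bytes = (hbm_gb - weights_gb) * 1e9
    per_sequence = per_token_bytes * seq_len
    return int(free_bytes // per_sequence)


# Hypothetical 70B-class model: 80 layers, 8 KV heads (grouped-query attention),
# head dimension 128, FP16 KV cache (2 bytes), ~70 GB of FP8 weights.
per_token = kv_cache_bytes_per_token(layers=80, kv_heads=8, head_dim=128, bytes_per_elem=2)
print(f"KV cache per 100k-token sequence: {per_token * 100_000 / 1e9:.1f} GB")
for hbm_gb in (192, 288):
    n = max_concurrent_sequences(hbm_gb, weights_gb=70, seq_len=100_000,
                                 per_token_bytes=per_token)
    print(f"{hbm_gb} GB HBM -> {n} concurrent 100k-token sequences")
```

Under these assumptions each 100k-token sequence needs about 33 GB of KV cache, so moving from 192 GB to 288 GB doubles the number of concurrent long-context sequences on one GPU (3 to 6), even though bandwidth is unchanged.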

Increased FLOPs & TDP

~50% more FLOPs than GB200 (headline figures are for low-precision FP4): Achieved via a B300 re-tape on TSMC 4NP, architectural improvements, and TDP of up to 1.4 kW for certain server SKUs.

Ideal for search-based or multi-path inference: Higher FLOP budgets allow parallel CoT expansions and sampling strategies that yield more accurate or thorough reasoning.

NVL72 Scaling

Multi-node synergy: Large-scale reasoning often benefits from pooling memory and compute across 72 NVLink-connected GPUs, which can approach linear scaling for well-parallelized workloads.

100k+ token contexts: Memory pooled across 72 GPUs (about 20.7 TB of HBM at 288 GB each) can handle massive chain-of-thought or multi-document references in real time, pushing the frontiers of “reasoning” model inference.
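A back-of-the-envelope view of pooling memory across an NVL72 domain, assuming 288 GB per GPU and idealized, even sharding of weights and KV cache with no duplication. The model size (1,000 GB of weights), the per-sequence KV size (40 GB for a 100k-token context), and the 10% reserve for activations and overhead are all hypothetical.

```python
def pooled_capacity_gb(gpus: int, hbm_per_gpu_gb: float) -> float:
    """Total HBM across one NVLink domain."""
    return gpus * hbm_per_gpu_gb


def sequences_that_fit(weights_gb: float, kv_gb_per_seq: float, gpus: int = 72,
                       hbm_per_gpu_gb: float = 288, reserve_frac: float = 0.1) -> int:
    """Long-context sequences whose KV cache fits after loading the weights.

    Idealized: weights and KV cache are sharded evenly with no duplication,
    and reserve_frac of memory is held back for activations and overhead.
    """
    usable = pooled_capacity_gb(gpus, hbm_per_gpu_gb) * (1 - reserve_frac)
    return max(0, int((usable - weights_gb) // kv_gb_per_seq))


# Hypothetical model: 1,000 GB of weights, 40 GB of KV cache per 100k-token sequence.
print("pooled HBM (GB):", pooled_capacity_gb(72, 288))
print("single GPU:", sequences_that_fit(1_000, 40, gpus=1))
print("NVL72 domain:", sequences_that_fit(1_000, 40))
```

The hypothetical model does not fit on a single GPU at all, but the pooled ~20.7 TB leaves room for several hundred long-context sequences. Real deployments replicate some state and pay communication costs over NVLink, so actual numbers will be lower.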

Hyperscaler Adoption

1.) Amazon

Improved TCO: With GB200, Amazon was held to NVL36 configurations, partly because of constraints around its in-house NICs. GB300’s modular design lets it pair its own 400G NICs with liquid cooling, which should substantially improve cost-efficiency.

Ramping up NVL72: Gains the same high-bandwidth synergy that Google, Meta, and Microsoft have leveraged with the prior generation.

2.) Meta

Multi-sourcing NICs: The modular “SXM Puck” design allows them to integrate 800G or custom solutions more freely.

Still reliant on NVIDIA for large HPC clusters: Even with in-house chip aspirations, the path of least resistance for next-gen inference remains NVIDIA’s robust ecosystem.

3.) Google

Balancing TPU usage: Though Google invests heavily in TPUs, certain memory-bound or highly dynamic inference tasks are better served by GPU clusters.

Dynamic chain-of-thought: The new GB300 architectures handle extended search-based inference particularly well, filling in gaps where TPU dataflow architectures might be suboptimal.

4.) Microsoft

Azure HPC + AI: Historically a bit slower to adopt major form-factor changes, Microsoft sees a significant advantage in bridging HPC resources with next-gen reasoning AI.

Customized GPU racks: The new supply chain model gives them more room to design specialized boards around their existing data center footprints.

End Result: All major hyperscalers see immediate benefits from upgrading to the more memory-rich, higher-FLOP GB300, effectively reinforcing NVIDIA’s position as the go-to vendor for large-scale, flexible reasoning systems.

RELATED: Top 8 HPC Stocks for DeepSeek-Style AI Scaling (2025-2030)

Brief Note on NVIDIA’s Rapid Inference Improvements

By zeroing in on memory capacity, throughput, and advanced multi-GPU interconnects—exactly what next-gen reasoning models demand—NVIDIA has quickly closed any perceived gap in cost/performance for inference.

As a result, it’s likely they’ll exceed many analysts’ expectations for how swiftly they can accommodate the “inference-first” era.

Common Misconception: “Inference Will Reduce NVIDIA’s Market Leadership”

Sources of the Misconception

Specialized ASICs: Competitors like Groq, Cerebras, or cloud ASICs (e.g., TPUs, Trainium) promote higher efficiency or lower cost-per-inference for specific workloads.

Fewer FLOPs Required: Compared to training, inference might appear less computationally intense, suggesting less need for NVIDIA’s high-end GPUs.

Assumed Overkill: Critics argue that GPUs’ general-purpose design is “overkill” for simpler inference tasks, pointing to custom accelerators as more optimized solutions.

Why the Reality Is More Complex

Advanced “Reasoning” Inference Demands:

Models like O3 and R1-Zero don’t just run a single forward pass; they engage in iterative chain-of-thought, search-based expansions, or multi-modal reasoning.

Result: Significantly more compute is required at inference time; summed across all user queries, inference FLOPs can rival or even exceed those spent on training.

Memory & Bandwidth Bottlenecks:

Large context windows, parallel CoT expansions, and multi-user concurrency can easily saturate memory bandwidth.

GPU architectures that integrate high-bandwidth memory (HBM) and advanced interconnects (e.g., NVLink) excel at these tasks, whereas many ASICs focus narrowly on raw compute or a single dataflow pattern.
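The bandwidth bottleneck can be quantified with a simple roofline model. During decode, each step reads the model weights once and shares them across the batch, so arithmetic intensity grows with batch size. `PEAK_FLOPS` and `HBM_BW` are hypothetical round numbers, not specs for any particular chip, and KV-cache reads are ignored, which makes the picture optimistic.

```python
PEAK_FLOPS = 5e15   # hypothetical dense FLOP/s for one accelerator
HBM_BW = 8e12       # hypothetical memory bandwidth in bytes/s


def decode_arithmetic_intensity(batch: int, bytes_per_param: float) -> float:
    """FLOPs per byte of weight traffic for one decode step.

    One step costs ~2 * N * batch FLOPs while reading ~N * bytes_per_param
    bytes of weights once for the whole batch, so N cancels out.
    KV-cache reads are ignored (optimistic for long contexts).
    """
    return 2 * batch / bytes_per_param


def attainable_flops(intensity: float, peak: float = PEAK_FLOPS, bw: float = HBM_BW) -> float:
    """Roofline: throughput is capped by compute or by memory traffic."""
    return min(peak, intensity * bw)


for batch in (1, 32, 256, 1024):
    ai = decode_arithmetic_intensity(batch, bytes_per_param=1.0)  # FP8 weights
    print(f"batch {batch:>4}: {ai:6.0f} FLOP/byte, "
          f"{attainable_flops(ai) / PEAK_FLOPS:6.1%} of peak")
```

At batch size 1 the hypothetical accelerator reaches well under 1% of peak; only large batches saturate compute. In long-context reasoning, KV-cache reads grow with every sequence and are not shared across the batch, which is why memory capacity and bandwidth, not raw FLOPs, often set the limit.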

Software Ecosystem Needs:

AI labs value complete toolchains for inference: scheduling, multi-instance concurrency, real-time monitoring, reliability.

NVIDIA’s CUDA, TensorRT, and Triton Inference Server remain the easiest route for large-scale deployments and rapid iteration.

Integrated Value Proposition (Synergy):

Although alternative chips might match or exceed NVIDIA in raw compute, memory capacity, or even bandwidth, NVIDIA’s strength lies in its integrated system.

The synergy between its hardware (high‑bandwidth memory, advanced interconnects) and software (CUDA, TensorRT, Triton) delivers consistent, scalable performance for complex, iterative inference tasks.

NVIDIA’s plug‑and‑play systems (e.g., DGX, SuperPOD) have been battle‑tested in production environments, ensuring that labs can deploy large‑scale inference solutions with minimal friction and high reliability.

Takeaway: While competitors do offer impressive raw hardware performance, the reality is more complex: advanced inference requires a combination of raw compute, efficient memory bandwidth, and a robust software ecosystem. NVIDIA’s integrated approach—where hardware and software work seamlessly together—makes it particularly well‑suited to meet these demanding needs at scale. This holistic integration is what sets NVIDIA apart, even if some competitors might win in individual spec comparisons.

Competitive Landscape: Cost vs. Ecosystem

Groq

Strengths: Ultra-low latency and potentially lower cost per token for certain inference tasks (e.g., fast single-stream token generation).

Limitations: Lean software stack, minimal HPC or multi-modal support, narrow developer community.
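Cost-per-token claims like these usually come down to simple arithmetic: hourly system cost divided by tokens served per hour. The prices, throughputs, and utilization levels below are hypothetical, not quotes for Groq, NVIDIA, or any other vendor.

```python
def cost_per_million_tokens(hourly_cost_usd: float, tokens_per_second: float,
                            utilization: float = 1.0) -> float:
    """USD per one million generated tokens.

    utilization is the fraction of each hour the system is actually serving.
    """
    tokens_per_hour = tokens_per_second * 3600 * utilization
    return hourly_cost_usd / tokens_per_hour * 1_000_000


# Hypothetical systems: a cheaper, slower one and a pricier, faster one.
systems = {"system A": (10.0, 5_000), "system B": (25.0, 20_000)}
for name, (hourly, tps) in systems.items():
    full = cost_per_million_tokens(hourly, tps)
    half = cost_per_million_tokens(hourly, tps, utilization=0.5)
    print(f"{name}: ${full:.2f}/M tokens at full load, ${half:.2f} at 50% load")
```

In this made-up example the pricier system is cheaper per token because its throughput is higher, and halving utilization doubles the effective cost. That is where software, scheduling, and ecosystem maturity show up in the bill.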

Cerebras

Strengths: The wafer-scale engine carries a very large pool of on-chip SRAM (44 GB on WSE-3), keeping much of a model’s working set or large batches close to compute. Impressive for large LLM training or single “monster” inference jobs.

Limitations: Scalability beyond one wafer can be tricky; specialized compiler environment, less plug-and-play in distributed inference contexts.

Read: When will Cerebras Systems Stock IPO?

Google TPU & Amazon Trainium
