GPT-5: OpenAI's Unified Model After GPT-4.5 (Orion) & "o-Series" (Future Roadmap)

Looks like Sam Altman and Co. are rolling everything into one model for GPT-5... but only after they release GPT-4.5 (Orion)

OpenAI’s Generative Pretrained Transformer (GPT) series has come a long way—from early breakthroughs in GPT‑2 and GPT‑3 to widely recognized, conversation-ready models like ChatGPT (GPT‑3.5) and the more multimodal GPT‑4.

While these advances captured public imagination, they also created complexity: a “model picker” of overlapping versions, specialized reasoning add-ons (the “o-series”), and distinct performance tiers for different use cases.

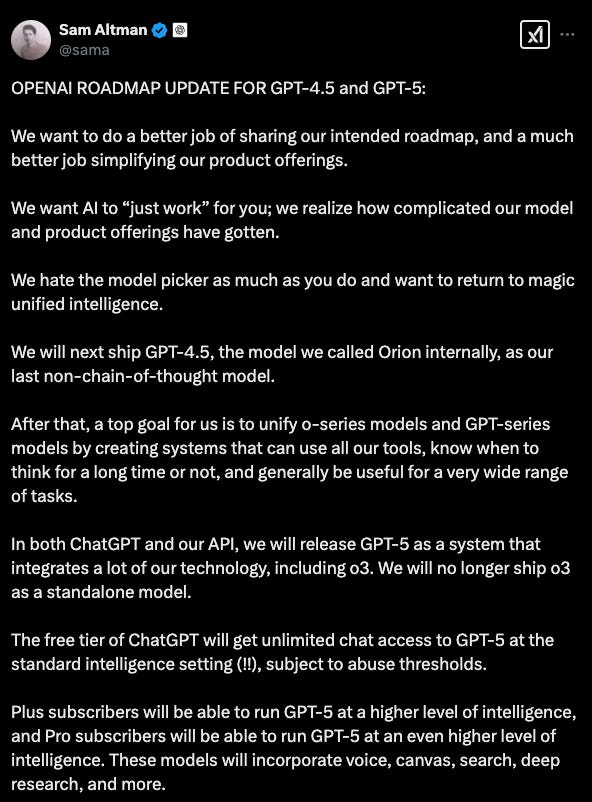

Responding to user feedback, OpenAI CEO Sam Altman recently outlined a streamlined roadmap designed to unify these offerings.

The plan centers around GPT‑4.5 (codenamed “Orion”) as the last purely “base” model and the imminent GPT‑5, which integrates advanced chain-of-thought (CoT) reasoning (previously known as “o3”) and multimodality into a single, adaptive system.

Note: Having a “single model” fixes the flak OpenAI got for naming various models in a way that confused the general population (e.g. GPT-4o, GPT-4, GPT-4o-mini, o3-mini, o3-mini-high, o1, o1-pro, etc.). I genuinely liked these names and distinctions, but many were too confused.

ChatGPT Evolution: GPT Models + O-Models (2025)

GPT‑1, GPT‑2, GPT‑3

Initial Foundations: The GPT journey started with GPT‑1 and GPT‑2, which showcased that large-scale language modeling on vast text corpora could yield remarkably fluent text generation. These models popularized the Transformer architecture and hinted at the potential for general-purpose language AI.

GPT‑3’s Breakthrough (2020): With 175 billion parameters (huge at the time), GPT‑3 demonstrated an ability to handle zero-shot tasks by simply reading examples in the prompt (“in-context learning”). Its uncanny language fluency quickly propelled it into the public eye, setting the stage for even larger, more capable successors.

GPT‑3.5 & ChatGPT

Refinement + RLHF: In late 2022, GPT‑3.5 introduced new training refinements and Reinforcement Learning from Human Feedback (RLHF), leading to an AI chatbot—ChatGPT—that delivered more coherent, context-aware conversations. ChatGPT’s rapid adoption fueled massive interest in large language models.

Widespread Adoption: By early 2023, ChatGPT (powered initially by GPT‑3.5) had become a household name, prompting myriad applications in coding assistance, tutoring, customer service, and more.

GPT‑4: Multimodal & More Capable

Released in Early 2023: GPT‑4 built on GPT‑3.5 by increasing context window sizes, improving factual reliability, and adding limited multimodality (handling images as well as text). It was more accurate overall—though still prone to occasional “hallucinations.”

Still a “Base” Model: Despite major improvements, GPT‑4 largely remained a single monolithic LLM without native chain-of-thought. Users could prompt GPT‑4 to produce stepwise logic, but advanced, dedicated reasoning required separate approaches—leading to “o-series” experiments.

The o-Series: Layering Advanced Reasoning (Inference Scaling)

Why the “o” Models?

Chain-of-Thought (CoT): Following GPT‑4, rumors emerged about “o-series” models—o1, o2, o3, etc.—specifically engineered to handle deeper reasoning. These models added a systematic chain-of-thought layer on top of the “base” GPT architecture.

Trade-Off: While the o-series excelled at complex math, logic, and problem-solving, they required more inference compute and were slower and costlier to run. As a result, users had to choose fast answers (the base GPT model) versus deep reasoning (o-series).

GPT‑4o & Beyond

Multimodal + Reasoning: Some references to “GPT‑4o” suggested a version of GPT‑4 that combined multimodality with advanced CoT. While powerful, it was not widely released as a single official product.

Fragmented Offerings: By mid-2023, OpenAI’s lineup began to look confusing: you might see references to GPT‑4 (base), GPT‑4.5 (an upcoming faster variant), or “GPT‑4o” (enhanced reasoning). This fragmentation spurred user frustration—a direct factor in Sam Altman’s vow to simplify.

GPT‑4.5 (“Orion”): The Last Pure “Base” Model

What GPT‑4.5 Brings

Speed & Efficiency: Often described as an optimized GPT‑4, GPT‑4.5 (Orion) promises improved inference speed, lower latency, and larger context windows (rumored: 256K tokens). It also aims for modest accuracy gains and better resource efficiency.

Not Fully Integrated CoT: Crucially, GPT‑4.5 remains in the “base model” tradition—it does not have advanced chain-of-thought baked into its architecture. This means it’s still best for general or simpler tasks, rather than specialized multi-step reasoning out of the box.

Why Call It the Last Base Model?

Diminishing Returns of Pure Scaling: Repeatedly increasing parameters or data sets yields smaller leaps in real reasoning. GPT‑4.5’s improvements are incremental but do not fundamentally solve the core issue of robust multi-step logic.

Transition to Unified AI System: Altman has explicitly stated that GPT‑4.5 is the final non-CoT release before the official pivot to GPT‑5, where base LLM capabilities and advanced CoT reasoning will merge into a single, integrated system.

Bridging the Gap: Orion is essentially a bridge: it gives users a refined “classic LLM” experience while OpenAI finishes preparing GPT‑5, which will do away with separate product lines altogether.

Sam Altman’s Roadmap: Simplified Product, New Inference Tiers

No More Model Picker (Simplicity)

“Magic” Unified Intelligence: Altman acknowledged the complexity in having separate GPT vs. o-series vs. “.5” updates. The new goal is to have a single GPT‑5 endpoint that internally decides whether to use “fast inference” or deeper CoT reasoning—no separate toggles for the user.

One System, Many Intelligence Levels: Instead of picking GPT‑4.5 or o3, users will simply use GPT‑5, which can ramp up or down its computational depth. This unification is meant to make AI “just work,” removing the friction of multiple model choices.

Tiered Access for GPT‑5

Free Tier: Notably, Altman announced unlimited chat at a “standard intelligence” setting for free users, an unexpected move that broadens AI accessibility.

Plus and Pro: Paying subscribers can request deeper chain-of-thought reasoning, possibly with longer inference or more advanced logic. This effectively replaces the separate “o-series” model picks with a slider or tier—the same GPT‑5, just different resource allocations.

Voice, Canvas, Search: GPT‑5 will also incorporate advanced features beyond text, such as voice-based interaction, “canvas” for visual/spatial tasks, and built-in search for real-time retrieval—unifying all these tools in one place.

GPT‑5: How “Base + Reasoning” Merge Behind the Scene

Speculative Architecture

True Integration: Rather than GPT‑4.5 (base) plus o3 (reasoning) as two separate components, GPT‑5 is expected to be trained from the ground up with chain-of-thought (CoT) as a native feature.

Automatic Mode Switching: Under the hood, GPT‑5 will still incorporate techniques from earlier base models but seamlessly apply advanced reasoning where needed. This means short queries can be answered quickly, while complex prompts trigger multi-step CoT pathways without extra user input.

Implications for Inference

Dynamic Resource Allocation: Users on the free tier likely get a “standard” inference level, which avoids heavy CoT to stay cost-effective and responsive. Meanwhile, Plus and Pro tiers can unlock deeper, more time- and compute-intensive CoT steps for advanced tasks (think extensive math proofs, coding assistance, or elaborate planning).

Unified API: For developers, GPT‑5 should appear as one endpoint rather than multiple specialized models. Parameters or usage tiers could signal how much reasoning to invoke, but the model remains the same under the hood.

Voice, Canvas, Search

Beyond Text: GPT‑5 aims to handle voice input and output, letting you speak queries or commands directly. A “canvas” feature may allow visual/spatial interaction—useful for sketching diagrams or workflows.

Integrated Search: For complex tasks requiring external data or real-time knowledge, GPT‑5 can automatically perform web lookups in the background, further reducing the need for separate “tool-using” model variants.

Evolving Upgrades: GPT‑5.5, GPT‑6, or Both?

Path A: Unified Releases Only

Straight to GPT‑6: Historically, OpenAI has introduced half-step versions (like GPT‑3.5, GPT‑4.5). But with GPT‑5 unifying base and reasoning, incremental improvements might simply roll into GPT‑5 until the next major milestone—GPT‑6—is warranted.

No More “o” Branding: In line with Sam Altman’s pledge, the “o‑series” name is likely gone for good; chain-of-thought enhancements will be standard.

Path B: .5 Versions + “Hidden” o-Series

Behind-the-Scenes Upgrades: It’s also possible that OpenAI keeps a .5 naming scheme (e.g., GPT‑5.5), quietly folding new reasoning levels in (e.g. if GPT-5 is based on o4-medium, then GPT-5.5 might be like o4-high or something).

Public Simplicity: Even if “o5” exists internally, it may never be offered as a separate product—merely rolled into GPT‑5.5 or GPT‑6, maintaining the simpler user-facing lineup.

Comparison to “Zero‑Base” Research (e.g., R1‑Zero)

Read: DeepSeek AI Debunked (2025): China’s ChatGPT with MoE

Fully RL-Based Approaches

DeepSeek’s R1-Zero: Recent research (such as R1‑Zero) demonstrates that advanced reasoning can emerge via reinforcement learning alone, without extensive human-labeled chain-of-thought. This is often called “zero‑base” because it forgoes a massive “base” LLM fine-tuned with CoT steps from humans. (Read: Top Stocks for DeepSeek-Style AI Scaling)

Narrow vs. Broad: While R1-Zero excels in domains like math or coding, it doesn’t yet match GPT’s wide coverage of general language tasks. GPT‑5 will still likely rely on large-scale text pretraining to maintain broad linguistic fluency.

Why GPT Likely Won’t Go “Zero‑Base”

User Familiarity: GPT models are prized for their skill across many domains (writing, coding, Q&A, creative tasks). Pure RL-based reasoners may not possess the same wide-ranging language facility unless they also incorporate large text corpora.

Hybrid Evolution: GPT‑5 may adopt some RL-based or self-play strategies (like automating reasoning steps), but will likely remain a hybrid of massive text pretraining + integrated chain-of-thought layers.

The Future for OpenAI Models (2025+)

Bigger Context Windows & More Multimodality

Continuous Improvement: Expect further expansions in GPT‑5’s context window, possibly surpassing GPT‑4.5’s rumored 256k tokens. This would allow the model to “remember” even longer conversation histories or entire documents at once.

Enhanced Modalities: With built-in voice, canvas, and search capabilities, GPT‑5 is edging closer to a general “AI assistant” that can handle diverse input and output formats seamlessly.

From Developers to End Users

API Simplification: Instead of multiple endpoints (e.g., GPT‑4.5 vs. o3), developers will call GPT‑5 and potentially specify “budget” parameters or subscription tiers. This unification lowers friction for building GPT-based apps.

Consumer Experience: For everyday users, ChatGPT with GPT‑5 means no more toggling “model” settings or waiting on separate betas. The system decides—behind the scenes—how deep to go in its reasoning, aligning with Altman’s “magic unified intelligence” vision.

Base GPT-4.5 (Orion) Evolves to GPT-5 (Unified)

With GPT‑4.5 (Orion) serving as OpenAI’s final pure base model, the shift toward GPT‑5 represents a major architectural and product pivot.

By merging advanced chain-of-thought reasoning directly into the model, OpenAI aims to eliminate the confusing “model picker,” streamline its product lineup, and offer flexible “intelligence tiers” within a single AI system.

Looking ahead, we can expect fewer model names but greater capability in each release.

No more “o‑series”—reasoning is now standard or “baked in.”

No more separate “base” vs. “reasoning”—it’s all GPT‑5 under one roof, with dynamic mode switching based on user needs.

Tiered Inference—from free to pro, letting users choose how much reasoning or compute they want to allocate.

Ultimately, this approach should simplify how both developers and end users engage with OpenAI’s technology, while simultaneously pushing GPT’s capacity for accurate, multi-step reasoning.

If all goes according to plan, GPT‑5 and its successors will feel like “one flexible AI engine,” seamlessly shifting from quick answers to thorough, stepwise problem-solving based on context and computational resources.

Thoughts on the Unified Framework for GPT-5…

Not necessarily a fan of this, but I get it for mainstream simplicity. All they really need to do is add multimodality to the reasoning models with longer memory and context window and we’re golden.

I WANT THE OPTION TO FORCE “HIGHEST” REASONING EVERY QUERY. (Will be gone with the new model. I don’t necessarily trust it to make the optimal decision. Sometimes even for simple questions I want to really grind the reasoning gears.)

The most obvious benefit of a unified model IMO? No more paradox of choice. Deciding which model to use can drain mental/cognitive resources (e.g. do I use o1-pro or o3-mini-high or just GPT-4o? etc.)

Something ultra-meta may be giving a switchboard between the current layout (with all the different models) AND the new unified variant (if this would even be possible).

If the unified variant gives me the best possible answers every query and draws only on the absolute best reasoning for every answer, I’ll like it.

If it shifts and makes “decisions” behind-the-scenes for me that might yield suboptimal output, I won’t. I don’t want a long PDF dissected with 4.5… I want the absolute best reasoning for 99% of my queries.

So we won’t really know if we’re getting the absolute highest performance (a lot more will likely be concealed with the unified model/framework).