Claude AI Sucks (2024): Usage Limits & Woke Censorship

I think Claude 3.5 Sonnet is the best, but hitting usage limits in the blink-of-an-eye + woke filters = bad value for $

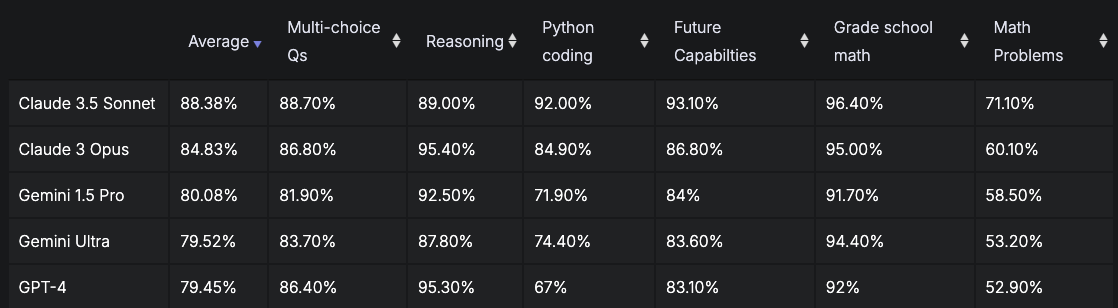

Claude 3 Opus was the top performing LLM for a while on most LLM leaderboards, then GPT-4o dethroned Claude 3 Opus, then Claude 3.5 Sonnet ousted GPT-4o as the top dog of non-CoT (Chain-of-Thought) AIs.

For some individuals, Claude 3.5 Sonnet remains the most useful AI (e.g. coding) and is the clear-cut current leader.

For most people and average AI users, Claude’s usage limits make it a horrendous value relative to other paid AIs like ChatGPT and Gemini.

Claude 3.5 Sonnet is currently my favorite model (and I think it’s the best) followed by Google’s AI Playground model for Gemini - then maybe GPT-4o (but only without internet capabilities - as the auto/forced internet searches are garbage).

Note: Claude may improve upon many of these in the future. If you’re reading this in 2025 - perhaps they’ve addressed these issues.


Why Claude (Anthropic AI) Sucks in 2024

1. Severe Usage Limits (Messages, Tokens, File Uploads)

Claude’s restrictive usage limits are the most significant issue for users, particularly on the Pro and Team plans.

These constraints severely limit productivity, making Claude less viable for extensive, complex tasks.

Token Limits: Claude’s models, such as Claude 3.5 Sonnet, quickly deplete their token allowance during long conversations or tasks requiring frequent inputs. On the Pro Plan, users can send 45 short messages every 5 hours, which totals at most around 216 messages per day (and only if every 5-hour window is used in full). Since Claude reprocesses the entire conversation with each input, users run out of tokens much faster than expected, especially when handling long-form discussions or multi-step workflows.

File Size Limits: Claude imposes tighter file size limits and allows fewer uploads than GPT-4o. Users who attempt to upload larger datasets or documents often find themselves hitting these limits, making it impractical for data-heavy workflows. By contrast, GPT-4o supports larger files, making it a better option for professionals working with complex or extensive datasets.

Message Frequency Resets: The 5-hour reset cycle forces users to wait if they hit their message limits, interrupting workflow continuity. In comparison, GPT-4o offers more generous daily usage limits, allowing users to work uninterrupted, especially for tasks requiring constant back-and-forth interactions.
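For anyone who wants to check the "216 messages per day" figure, here is a minimal sketch of the arithmetic. The 45-message and 5-hour numbers come from the paragraph above; the function name is just made up for illustration, and the result is a theoretical ceiling that assumes you use every window in full, around the clock.

```python
# Figures quoted in the article for the Pro plan (short messages only).
MESSAGES_PER_WINDOW = 45
WINDOW_HOURS = 5


def max_messages_per_day(messages_per_window: int, window_hours: int) -> float:
    """Upper bound on messages in 24 hours, assuming every window is fully used."""
    return messages_per_window * 24 / window_hours


if __name__ == "__main__":
    print(max_messages_per_day(MESSAGES_PER_WINDOW, WINDOW_HOURS))  # 216.0
```

In practice nobody is awake for all 4.8 windows, and longer messages count for more, so the real number is lower.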

2. Conversation Length Restrictions

Claude’s method of reprocessing the entire conversation history with every new message results in rapid token depletion, which severely limits the ability to maintain long conversations:

Token Consumption in Long Conversations: Each time a user sends a message, Claude re-analyzes the previous context, causing tokens to be used up much more quickly. This can lead to frequent interruptions when users need extended dialogues, forcing them to restart conversations and lose valuable context. GPT-4o, by comparison, allows for longer, uninterrupted conversations, making it more suitable for users who need extended, complex interactions.

Workflow Disruptions: Restarting conversations due to token exhaustion breaks the continuity of tasks and can create inefficiencies, especially for users working on complex projects that require sustained input. Users find GPT-4o to be a better fit for projects requiring continuity, as it does not suffer from the same frequent interruptions.
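To see why reprocessing the whole history burns through an allowance so quickly, here is a rough sketch. It is not Anthropic's actual accounting: the function name, the flat 500 tokens per turn, and the turn counts are all assumptions picked for illustration. The point is only that the total grows roughly with the square of the conversation length.

```python
def cumulative_tokens_processed(tokens_per_turn: int, num_turns: int) -> int:
    """Total tokens read over a conversation if the full history is re-read each turn.

    Simplifying assumption: every turn (message plus reply) adds the same
    number of tokens to the history.
    """
    total = 0
    history = 0
    for _ in range(num_turns):
        history += tokens_per_turn  # the new turn joins the history
        total += history            # the entire history is processed again
    return total


if __name__ == "__main__":
    short_chat = cumulative_tokens_processed(500, 10)
    long_chat = cumulative_tokens_processed(500, 40)
    print(short_chat, long_chat)  # 27500 410000
```

Under these made-up numbers, a conversation that is 4x longer processes about 15x as many tokens, which is why starting a fresh chat stretches the limit further than continuing a long one.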

3. Restricted File Size Limits & Number of Uploads

Claude’s restrictive file size limits and cap on the number of uploads create significant challenges for users handling data-heavy tasks:

File Size & Upload Constraints: Claude’s file size limits prevent users from uploading large datasets or extensive documents for processing. This constraint hinders workflows for professionals like researchers and analysts who need to handle large amounts of data. In comparison, GPT-4o allows for much larger file uploads, making it a more practical tool for users dealing with substantial files.

Impractical for Data-Heavy Tasks: Users who need to upload multiple or large files, such as datasets for analysis or legal documents for review, find Claude unsuitable due to these restrictions. GPT-4o, with its higher file size and upload capabilities, is the better choice for those handling large amounts of data.

4. Focus on Safety Over Utility & Truth

Claude’s strong emphasis on safety, while commendable, often comes at the cost of practical utility, as its aggressive content filtering blocks many benign queries.

Overly Cautious Filters: Claude frequently blocks queries that it deems potentially harmful or inappropriate, even when the requests are completely benign. For example, some users report being blocked or banned after asking for things as innocent as health advice or recipe suggestions. This aggressive filtering is seen as overly cautious compared to GPT-4o, which strikes a better balance between safety and usability.

Limited Usability in Certain Scenarios: The overemphasis on safety reduces Claude’s effectiveness for many tasks, as it frequently refuses to engage in queries that users would expect AI to handle. In comparison, GPT-4o delivers more practical responses while still adhering to responsible content guidelines, making it a better option for a broader range of queries.

5. Cost Relative to Other AIs

At $20 per month for the Pro plan, many users feel that Claude does not provide enough value for the price, especially when compared to other models like GPT-4o:

Low Value for Paid Users: Despite paying for the Pro plan, users still face limitations on token usage, file size uploads, and frequent resets. This makes the Pro plan feel less valuable than expected, as many find they run into the same issues that plague free users. Paying customers expect more flexibility, and GPT-4o offers better value by providing more generous usage limits.

Better Alternatives Exist: GPT-4o, for example, offers higher token limits, larger file uploads, and better overall performance at a comparable price. Users frequently switch to GPT-4o for these reasons, feeling that Claude doesn’t offer enough value relative to its competition.

6. Not Always Better Than GPT-4o

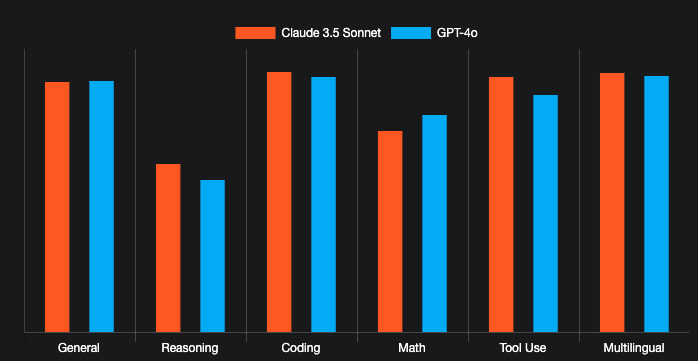

Although Claude performs well in some areas, it doesn’t consistently outperform GPT-4o for general/basic tasks.

Similar General Performance: Claude is subjectively a bit better than GPT-4o for me (but I use both for advanced tasks). For general conversations, I'd guess most people won’t notice much of any difference between the chatbots… Even Gemini is solid for general performance. Certain LLM Leaderboards even have GPT-4o ahead of Claude models for general task performance.

Switching to GPT-4o: Many users, especially those who need a more general-purpose AI that has internet-search capabilities, prefer GPT-4o. Claude doesn’t have internet connectivity (I prefer zero internet unless synced with Perplexity).

How Claude Remains an Elite AI

1. The Best Coding Outputs

Despite its shortcomings, Claude excels in coding, delivering some of the best results in code generation and refinement.

According to the SEAL LLM Leaderboards, Claude ranks among the top models for coding tasks.

It performs particularly well in HumanEval coding benchmarks, where its structured outputs and clean code make it a preferred choice for developers over GPT-4o in some cases.

2. Concise High IQ Answers

In my personal experience, Claude 3.5 Sonnet is currently the best of any chatbot at providing concise, high IQ answers.

It isn’t as good as o1-preview for certain types of queries, but it generally demolishes the competition if the aim is concise + high IQ combo.

o1-preview vomits a Wikipedia page each response and ChatGPT-4o is often lower in accuracy than Sonnet 3.5 (plus its forced auto-internet usage has made its quality drop off the map)… on rare occasions GPT-4o is superior in accuracy to Claude 3.5 Sonnet.

For most advanced queries Claude 3.5 Sonnet outperforms all non-CoT (chain-of-thought) models - and I’ve noticed that on occasion it still beats CoT models or finds errors in their outputs (e.g. o1-preview outputs).

3. Flexible API Access

Claude’s API offers more flexibility than the regular interface, making it a better option for technically skilled users.

Users with technical expertise can bypass some of the token and usage restrictions by integrating Claude’s API into their workflows.

This offers a more customizable and flexible experience, making it a viable option for developers and companies.

4. Ethical Standards for Sensitive Use

Claude’s strong focus on safety and ethical compliance makes it ideal for organizations needing conservative content moderation.

Educational institutions, healthcare providers, and other organizations handling sensitive content may benefit from Claude’s strict adherence to safety guidelines.

Its filters provide reassurance that harmful or inappropriate content will not slip through, making it a trusted option for those in high-stakes environments.

5. Analysis Tool, Visualizations, Artifacts

Claude has an “Analysis Tool” that can run/write code to process data, run analysis, and produce data visualizations in real-time.

I’ve found that the data visualizations are far superior to anything any other AI can generate at the moment - it’s putting ChatGPT to shame.

There are also other tools like “Artifacts”, which makes its outputs (e.g. code snippets, text docs, web designs, etc.) appear in a dedicated window alongside the conversation (ChatGPT can now do this in “Canvas mode”).

Note: One aspect of Claude that I prefer over ChatGPT is that Claude is fully offline (no internet search). I think ChatGPT’s Forced/Auto Internet Search Sucks.

My biggest issues with Claude AI (Anthropic AI)

Here are my biggest gripes… and unless the team addresses them or has a significantly better product than ChatGPT – there’s zero chance I’ll be resubscribing to Claude.

If they had a premium monthly plan priced competitively with ChatGPT and focused on toning down some of the woke guardrails - I’d resubscribe immediately.

Usage limits: I hit my usage limits in the blink of an eye with Claude… It seems like Anthropic AI doesn’t really care about paying customers or prioritize them with better usage (compared to ChatGPT). Almost like they’re socialists hoping people spend on the premium model so they can give nearly equal usage to non-paying customers.

Woke filters: The entire Anthropic team is infected with the “woke mind virus” in terms of safety. If you ask anything remotely controversial (even if scientifically factual) it flags the content and/or will refuse to answer or provide feedback. This limits its utility especially when engaging in philosophical hypotheticals.

Not “max” truth seeking: I want an AI that gives me the raw truth even for controversial or sensitive topics. Not something that generates “it’s complex” or “complex factors” or some complete BS that is an obvious way to obfuscate or distort objective reality - to avoid offending users.

Walking on eggshells: As a user, I can kind of guess what might trigger some sensitive content warnings and a potential “ban” but I don’t really know. As a result it feels like walking on eggshells if using Claude for philosophical conversations or objective analyses of society. (Read: Claude is amazing but I got banned on my first day).

Bannings: I know multiple people who’ve been banned from Claude for asking what I’d consider benign questions. One individual I know got banned for asking for a specific type of cooking recipe (no joke).

Poor value for $ (relative to ChatGPT): Even if Claude slightly exceeds the abilities of GPT-4o in certain niche domains, it isn’t worth it to me. I can use ChatGPT all day without hitting usage limits and the outputs are really good. ChatGPT also has an internet search that many people like.

Outgunned by o1: Claude has already been somewhat outgunned by the o1 (chain-of-thought) add-on applied by ChatGPT. If you want to use the most premium model on the market today – it’s with OpenAI. Even DeepSeek has a pretty decent CoT model that can be used freely.

Similar to GPT-4o: For certain queries Claude 3.5 Sonnet is noticeably better than GPT-4o… but usually the outputs are comparable. Occasionally GPT-4o is slightly more accurate and/or better than Claude models.

Safetyism, decel, EA: By paying for Claude you’re funding people who think “safety” is censoring objective facts (to avoid hurting feelings). The team is mostly “decel” or against accelerating AI (which will likely benefit humanity more than harming it). And they are aligned with the EA movement, yet the most EA things I can think of are AI acceleration, human experimentation, and embryo selection/gene editing - most of which are not supported by Claude and/or the team.

November 2024: Claude 3.5 Sonnet vs. Others

Snapshots taken from Vellum.AI.

What I’d recommend for Claude (Anthropic AI)…

Not that they really care… but I’m going to say it anyway.

My 3 biggest problems with Claude at the moment are: (1) shitty value for paying users relative to competition; (2) impossible platform for “power users” (unless API); and (3) ultra-extreme censorship (almost like it’s in extreme woke mind virus child-mode).

If they increased usage limits to match OpenAI or even raised the price a little but had an unlimited usage plan - I’d say now they’re at least offering something people want.

Increase Value for Paying Users: Prioritize people who are actually willing to pay for Claude. Give premium users more value for their $: higher usage rates, better performance, premium models, enhanced capabilities/features, etc. Scale back or completely eliminate offerings for non-paying users.

Release a Premium “Unlimited” Plan: Trying to be a Claude “power user” is impossible because limits are reached seemingly after just a few requests. Maybe they’re working on this, but if you plan on relying on one AI to massively augment your workflow, Claude is a horrendous option at the moment due to its low limits.

Eliminate or Reduce Censorship & Filters: For everything that is legal, remove the filters and censorship. By keeping heavy censorship, Claude distorts the truth – which could lead to skewed perceptions of reality for the userbase. This isn't true safety. Focus on becoming a max truth seeking AI – not on trying to conceal the truth in fear of woke backlash.

Double Down on R&D (Compete): Focus on leveraging R&D strength to make groundbreaking advancements. In a market saturated with competitors, this could set Anthropic apart and lead to increased dominance.

Secure more investment & hardware: It seems like all Anthropic cares about is creativity, safety, and wokeism. If there were an “effective altruist” AI it would be Claude. Anthropic is extremely efficient and talented with ~375 employees (vs. OpenAI’s 2k) - but they need more $ for hardware.

Do I like using Claude?

I love using Claude… Sonnet 3.5 is my favorite for concise elite non-CoT (chain-of-thought) responses at the moment.

I had an Anthropic subscription for probably 1.5 years, but the final straw was garbage usage limits… I kept hitting limits so fast that Claude became mostly unusable.

Additionally, any analysis of socially sensitive and/or controversial data is met with an outright refusal: “Sorry I can’t help with that” (or something similar).

It is often possible to bypass these safety guardrails by essentially proving via conversation that you have no bad intentions (e.g. discrimination, medical self-experimentation, etc.).

Claude is currently (as of Nov 2024) just a bad value for money relative to other options.

At $20 per month I get much higher usage limits with ChatGPT Plus (when 4o runs out I can still use 4o with canvas… when that runs out I can use 4o-mini – which isn’t ideal but still decent.)

I also use Perplexity Pro. There are also some reasonable free AIs like DeepSeek, Gemini, etc. - so I haven’t been motivated to reactivate my Claude subscription.

What do you think about Claude vs. competitors?

To me it seems the Anthropic squad contains highly intelligent, woke/leftist, socialist-minded individuals that restrict paying users to subsidize everyone else.

These aren’t hyper-competitive people ready to assemble a supercluster of 500k Nvidia Blackwell GPUs in under 30 days like xAI… they’d rather jerk each other off in hypothetical theoretical philosophical debates about AI risk, p(doom), etc.

If Anthropic continues to place an outsized emphasis on “safety” (not really safety just their woke censorship/filters) – they may get severely outgunned by OpenAI, xAI, and/or Chinese competitors.

It is known that many former OAI employees now work at Anthropic, but they’re taking an ultra-cautious approach and moving at a snail’s pace… and for now it seems to be working (as Claude is mostly excellent - just not ideal in terms of usage limits and sensitive content filters).

Note: There is a way to access Claude 3.5 Sonnet without usage limits (albeit more restricted tokens per response) - but you need to use a different platform. I won’t mention because I fear a potential crackdown if it becomes widely known.